HIPAA and AI: What Medical Practices Need to Know

Using AI in healthcare requires careful HIPAA compliance. Here's a practical guide to evaluating AI vendors and implementing AI safely in your practice.

The first question every medical practice asks about AI: “Is it HIPAA compliant?”

The honest answer: it depends entirely on how you implement it.

AI technology itself isn’t inherently compliant or non-compliant. It’s a tool. Compliance comes from how you select vendors, configure systems, train staff, and govern data. A well-implemented AI solution can be fully HIPAA compliant. A poorly implemented one, even from a reputable vendor, can create significant liability.

Here’s what medical practices need to understand about AI and HIPAA.

The Basic Framework

HIPAA compliance for AI follows the same principles as any other technology handling protected health information (PHI):

The Privacy Rule

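- Without patient authorization, PHI may generally be used or disclosed only for treatment, payment, or healthcare operations (plus a limited set of other uses the rule permits, such as disclosures required by law)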

- Minimum necessary standard applies (only access what’s needed)

- Patient authorization required for other uses

- Notice of Privacy Practices must be updated if AI changes how you handle data

The Security Rule

- Administrative safeguards (policies, training, risk analysis)

- Physical safeguards (facility access, device security)

- Technical safeguards (access controls, encryption, audit trails)

The Breach Notification Rule

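- Obligation to notify affected patients and HHS (and, for large breaches, the media) after a breach of unsecured PHI; patients must be notified without unreasonable delay and no later than 60 days after discovery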

- Applies whether breach comes from human error or AI system failure

Business Associate Agreements

- Any vendor handling PHI on your behalf must sign a BAA

- This includes AI vendors whose systems process patient data

When Is a BAA Required?

Not every AI implementation requires a BAA, but many do.

BAA Required

- AI system processes, stores, or transmits PHI

- AI has access to patient records

- AI communicates directly with patients in ways that might include PHI

- AI vendor provides services involving PHI on your behalf

BAA May Not Be Required

- AI operates only on data de-identified under one of HIPAA’s two methods (Safe Harbor or Expert Determination)

- AI handles only non-PHI information (office hours, directions, etc.)

- AI runs entirely within your existing HIPAA-compliant infrastructure without vendor access

When in doubt, require a BAA. It’s better to have one you don’t need than to lack one you do.

Evaluating AI Vendors for Compliance

Not all AI vendors understand healthcare compliance. Here’s how to evaluate them:

Essential Questions to Ask

1. Will you sign a Business Associate Agreement?

If they hesitate or don’t know what a BAA is, walk away. This is table stakes.

2. Where is data stored and processed?

Understanding data geography matters:

- US-based storage is simpler for compliance

- Cloud providers must also be HIPAA compliant

- Some states impose privacy or data-handling requirements that go beyond HIPAA

3. What security certifications do you have?

Look for:

- SOC 2 Type II report (an independent auditor’s attestation rather than a certification)

- HITRUST certification (gold standard for healthcare)

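- HIPAA-specific assessments or attestations (there is no official government HIPAA certification, so ask who performed the assessment and what it covered)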

4. How is data encrypted?

Baseline expectations:

- Encryption in transit (TLS 1.2 or higher)

- Encryption at rest (AES-256 or equivalent)

- Key management practices
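
As an illustration of what “TLS 1.2 or higher” can look like on your side of the connection, here is a minimal Python sketch using the standard library `ssl` module. The function name `make_tls_context` is hypothetical, and this covers only encryption in transit; encryption at rest and key management depend on the vendor’s platform and are not shown.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses anything older than TLS 1.2."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context()
    # Reject TLS 1.0 and 1.1 handshakes outright.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


context = make_tls_context()
```

A context like this can be passed to standard-library clients (for example, `urllib.request.urlopen(url, context=context)`) so that connections to a vendor endpoint fail rather than silently downgrade.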

5. What access controls exist?

- How do they control who can access data?

- Is there role-based access?

- How are access permissions managed?

6. What audit capabilities exist?

- Can you see who accessed what data and when?

- How long are audit logs retained?

- Can you get audit reports?

7. What happens if there’s a breach?

- What’s their incident response process?

- How quickly will they notify you?

- What support do they provide for breach response?

8. What happens to data if you end the relationship?

- How is data returned or destroyed?

- What’s the timeline?

- How is destruction verified?

Red Flags

Be cautious if vendors:

- Can’t clearly explain their security practices

- Have never worked with healthcare clients

- Won’t sign a BAA without extensive negotiation

- Can’t provide compliance documentation

- Have had publicized security incidents they haven’t addressed

- Use vague language about “HIPAA compliance” without specifics

Implementation Safeguards

Even with a compliant vendor, you need internal safeguards:

Minimum Necessary Principle

AI should only access the data it needs for its function. Questions to ask:

- What specific data elements does the AI access?

- Why is each element necessary?

- Can we limit access further without losing functionality?
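
One way to put the questions above into practice is an explicit allow-list of fields per AI use case. The sketch below is a minimal Python illustration; the field names and the `SCHEDULING_FIELDS` allow-list are hypothetical and would need to match your own records system.

```python
from typing import Any

# Hypothetical allow-list for a scheduling assistant; your field names will differ.
SCHEDULING_FIELDS = {"patient_id", "appointment_time", "provider", "visit_type"}


def minimum_necessary(record: dict[str, Any], allowed: set[str]) -> dict[str, Any]:
    """Return a copy of the record containing only allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "patient_id": "P-1001",
    "appointment_time": "2025-03-04T09:30",
    "provider": "Dr. Lee",
    "visit_type": "follow-up",
    "diagnosis": "example diagnosis",  # not needed for scheduling
    "ssn": "000-00-0000",              # not needed for scheduling
}

# Only the four scheduling fields are passed on to the AI system.
payload = minimum_necessary(record, SCHEDULING_FIELDS)
```

An allow-list fails closed: a field added to the record later is withheld from the AI until someone decides it is necessary and documents why.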

Access Controls

Who in your organization can:

- Configure the AI system?

- Access AI-generated outputs?

- View audit logs?

- Modify AI parameters?

Limit access to those who need it, and document access decisions.
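
The four questions above map naturally onto a role-to-permission table. The following Python sketch is one possible shape for it; the role names and assignments are hypothetical examples, not a recommended policy.

```python
from enum import Enum, auto


class Permission(Enum):
    CONFIGURE_AI = auto()
    VIEW_OUTPUTS = auto()
    VIEW_AUDIT_LOGS = auto()
    MODIFY_PARAMETERS = auto()


# Hypothetical roles and assignments; adjust to your own staffing and policies.
ROLE_PERMISSIONS: dict[str, set[Permission]] = {
    "practice_admin": {Permission.CONFIGURE_AI, Permission.MODIFY_PARAMETERS,
                       Permission.VIEW_OUTPUTS, Permission.VIEW_AUDIT_LOGS},
    "clinician": {Permission.VIEW_OUTPUTS},
    "compliance_officer": {Permission.VIEW_OUTPUTS, Permission.VIEW_AUDIT_LOGS},
    "front_desk": set(),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles and unlisted permissions get no access."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Keeping the table in one place also gives you something concrete to attach to your documentation of access decisions.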

Audit and Monitoring

Implement ongoing monitoring:

- Regular review of AI activity logs

- Periodic access reviews

- Monitoring for unusual patterns

- Documentation of reviews conducted
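
“Monitoring for unusual patterns” can start very simply. The sketch below, in Python, flags any account that touched more distinct patient records than a chosen threshold. The `AuditEvent` structure, the account names, and the threshold are all assumptions for illustration; real audit logs come from your vendor or EHR in their own format.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class AuditEvent:
    timestamp: datetime
    user: str
    patient_id: str
    action: str


def users_over_threshold(events: list[AuditEvent], max_patients: int) -> list[str]:
    """Return users who accessed more distinct patient records than max_patients."""
    patients_by_user: dict[str, set[str]] = {}
    for event in events:
        patients_by_user.setdefault(event.user, set()).add(event.patient_id)
    return sorted(
        user for user, patients in patients_by_user.items()
        if len(patients) > max_patients
    )


events = [
    AuditEvent(datetime(2025, 3, 4, 9, 0), "front_desk_1", "P-1001", "view"),
    AuditEvent(datetime(2025, 3, 4, 9, 5), "ai_service", "P-1001", "read"),
    AuditEvent(datetime(2025, 3, 4, 9, 6), "ai_service", "P-1002", "read"),
    AuditEvent(datetime(2025, 3, 4, 9, 7), "ai_service", "P-1003", "read"),
]

# With a threshold of 2 distinct patients, only "ai_service" is flagged.
flagged = users_over_threshold(events, max_patients=2)
```

A flag is a prompt for human review, not proof of misuse. The AI service account in this sample may have a legitimate reason to read many records, which is exactly what the review should confirm and document.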

Training

Staff need to understand:

- What the AI system does and doesn’t do

- Their responsibilities regarding the AI

- How to report concerns or incidents

- What not to input into AI systems

Incident Response

Update your incident response plan to include AI:

- How to identify AI-related incidents

- Who to contact at the vendor

- Documentation requirements

- Communication protocols

Learn more about IT security for medical practices →

Special Considerations for Different AI Types

Different AI applications have different compliance considerations:

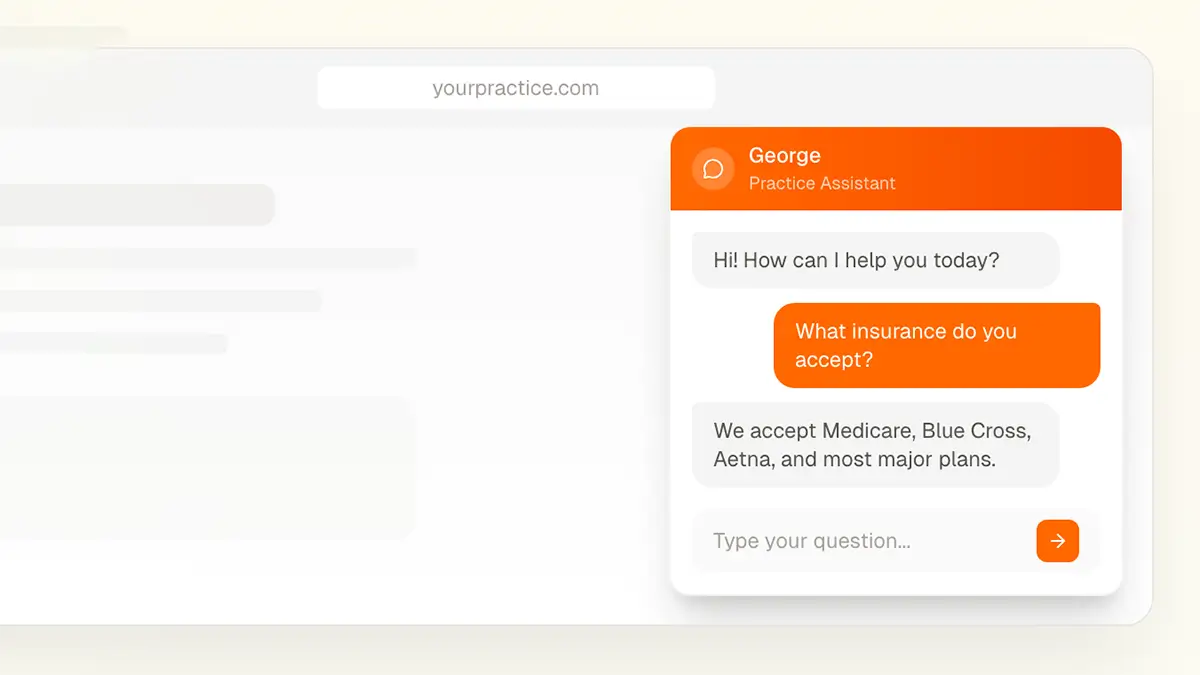

Chatbots and Virtual Assistants

Lower risk if:

- Only handling non-PHI queries (hours, location, general info)

- No integration with patient records

- No patient authentication

Higher risk if:

- Patients might share health information in chat

- Integration with scheduling or patient records

- Accessed through patient portal

Safeguards:

- Clear disclaimers about not sharing personal health information

- No logging of conversations that might contain PHI (or secure logging if needed)

- Clear escalation to human staff for clinical questions
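
If chat conversations must be logged, one partial safeguard is to mask obvious identifiers before anything is written to disk. The Python sketch below uses a few illustrative regular expressions. Treat it as an assumption-laden example: pattern matching cannot catch free-text health details (“my knee surgery last week”), so it does not de-identify a conversation and does not replace secure logging.

```python
import re

# Illustrative patterns only; they will miss many real-world formats.
PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\(?\d{3}\)?[-. ]\d{3}[-. ]\d{4}"), "[PHONE]"),
]


def redact(text: str) -> str:
    """Replace matches of each pattern with a placeholder before logging."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


message = "My SSN is 123-45-6789, call me at 555-123-4567 or jane@example.com."
safe_to_log = redact(message)
# safe_to_log == "My SSN is [SSN], call me at [PHONE] or [EMAIL]."
```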

Workflow Automation

Higher risk because:

- Often requires access to clinical documentation

- May process insurance and billing information

- May handle prior authorizations with PHI

Safeguards:

- Robust BAA with vendor

- Minimum necessary access to records

- Audit trails for all data access

- Human review of automated outputs

Custom AI Development

Highest complexity because:

- You’re responsible for design decisions

- Training data may include PHI

- More variables to control

Safeguards:

- HIPAA expertise in development team

- Privacy-by-design approach

- De-identification of training data where possible

- Thorough testing before deployment
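
To make “de-identification of training data” more concrete: HIPAA’s Safe Harbor method requires removing 18 categories of identifiers, including reducing dates to the year and ZIP codes to their first three digits (with sparsely populated areas set to 000). The Python sketch below shows only a small part of that idea. The field names, the `low_population_zip3` parameter, and the sample values are hypothetical, and the function does not cover every identifier category or special cases such as ages over 89, so it is not a complete Safe Harbor implementation.

```python
from typing import Any

# Hypothetical field names; map these to your own schema.
DIRECT_IDENTIFIERS = {"name", "ssn", "phone", "email", "street_address", "mrn"}


def reduce_identifiers(record: dict[str, Any], low_population_zip3: set[str]) -> dict[str, Any]:
    """Drop direct identifiers and coarsen dates and ZIP codes (partial illustration)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "visit_date" in out:
        # Keep only the year from an ISO-formatted date string (YYYY-MM-DD).
        out["visit_year"] = str(out.pop("visit_date"))[:4]
    if "zip" in out:
        zip3 = str(out.pop("zip"))[:3]
        # Sparsely populated three-digit ZIP areas are replaced with "000".
        out["zip3"] = "000" if zip3 in low_population_zip3 else zip3
    return out


sample = {
    "name": "Jane Doe",
    "mrn": "12345",
    "visit_date": "2024-11-05",
    "zip": "30301",
    "diagnosis_code": "E11.9",
}
training_row = reduce_identifiers(sample, low_population_zip3={"036"})
```

Any real de-identification pipeline should be reviewed by someone with HIPAA expertise before its output is treated as de-identified data.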

Learn more about custom AI development →

The Training Data Question

One unique consideration for AI: training data.

What’s the Concern?

AI systems learn from data. If that data includes PHI:

- Who owns the data used for training?

- Is your practice’s data being used to train general AI?

- Could PHI be recoverable from the AI model?

Questions to Ask Vendors

- Is our data used to train AI models that serve other customers?

- How is training data de-identified?

- What contractual protections exist around training data use?

- Can we opt out of having our data used for training?

Best Practices

- Require clear contractual language about data use

- Prefer vendors with strong data use limitations

- Understand the difference between “your data improves the AI” and “your data stays yours”

Documentation Requirements

Document your AI compliance efforts:

Vendor Assessment

- Record of vendor evaluation

- BAA execution

- Security documentation received

- Risk assessment conducted

Implementation

- Configuration decisions and rationale

- Access control decisions

- Training conducted

- Policies updated

Ongoing Operations

- Audit log reviews

- Access reviews

- Incident reports

- Performance assessments

This documentation demonstrates due diligence if questions arise later.

Common Compliance Mistakes

Practices get into trouble when they:

1. Assume “AI” means “automatically compliant”

AI is a tool. Compliance comes from implementation.

2. Skip the BAA because “it’s just a chatbot”

Even chatbots may process PHI if patients share health information.

3. Over-share data with AI systems

Give AI only what it needs, not blanket access to records.

4. Forget about training

Staff need to understand how to use AI compliantly.

5. Set and forget

AI systems need ongoing monitoring, not just initial setup.

6. Ignore the vendor’s other customers

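A breach at your AI vendor affects you too, even when it starts somewhere else in their customer base. Evaluate their overall security posture, not just the parts that touch your account.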

Working With Your Compliance Team

If your practice has compliance personnel or uses outside compliance consultants:

Involve them early

- Before selecting vendors

- During implementation planning

- When drafting policies

Provide them with

- Vendor security documentation

- Proposed AI use cases

- Data flow diagrams

- Vendor BAAs

Get their sign-off on

- Vendor selection

- Implementation approach

- Updated policies

- Training materials

The Bottom Line

HIPAA compliance for AI isn’t fundamentally different from compliance for any other technology. The principles are the same: appropriate safeguards, business associate agreements, minimum necessary access, and ongoing monitoring.

The key differences are:

- AI vendors vary widely in healthcare sophistication

- Training data raises unique privacy questions

- The technology is evolving rapidly, requiring ongoing attention

Don’t let compliance concerns prevent you from exploring AI. Approach it thoughtfully, with proper safeguards in place.

Need Help With AI Compliance?

We help medical practices evaluate AI solutions and implement them with appropriate HIPAA safeguards.

Contact us to discuss AI compliance →

Or call us directly: (678) 824-2420